Kubernetes Policy-as-Code using Kyverno and OPA Gatekeeper (Part 1)

This project takes a deliberately vulnerable bank application, VULN-BANK by Commando-X (GitHub), and secures its Kubernetes deployment using Kyverno and Open Policy Agent (OPA) Gatekeeper as Policy-as-Code tools. The goal is to deploy VULN-BANK and enforce organisational, compliance-aligned security policies directly within the cluster: all application containers must run as non-root users, memory and CPU limits are injected automatically when they are not defined, and applications are prevented from running in privileged mode, in line with regulatory frameworks such as PCI DSS and ISO 27001.

Two Key Policy Use-Cases Implemented

- Kyverno: Enforce that all containers run as non-root users and automatically inject CPU and memory limits if not defined.

- OPA Gatekeeper: Prevent Pods from using hostNetwork: true or running in privileged mode, protecting the host and meeting compliance standards like PCI DSS and ISO 27001.

This guide is broken down into three phases:

- Setting up the Environment

- Deploying the Insecure VULN-BANK App in Kubernetes

- Enforcing a Security Policy

Setting up the Environment

Prerequisites

Install these tools:

- Git

- Docker / Docker Desktop

- Kubectl: command-line tool for interacting with a Kubernetes cluster

- kind (Kubernetes in Docker, for a local Kubernetes cluster)

- Helm: package manager for Kubernetes. Think of it like apt or brew, but for Kubernetes applications. It bundles all the necessary YAML files and configurations into a package called a chart

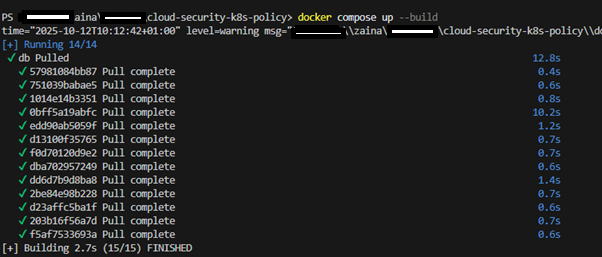

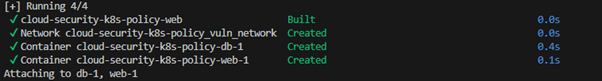

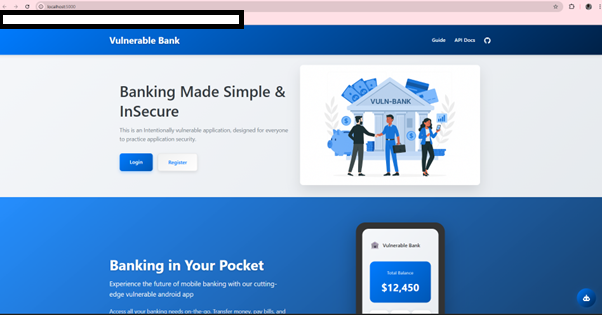

To get started, spin up the app by following its documentation (GitHub) and run the Docker container locally to confirm that the app works.

N.B.: Once you have confirmed that the app runs, stop the Docker container to avoid unnecessary load on the system.

docker ps

docker stop <container_id>

Create a Local Kubernetes Cluster with kind and Prepare the Image

We will use kind to create a local Kubernetes cluster inside Docker containers so we can run everything locally without cloud costs.

1) Create a kind Cluster

In the project root folder, create kind-config.yaml and paste the following into it:

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 3000
    hostPort: 3000
    protocol: TCP

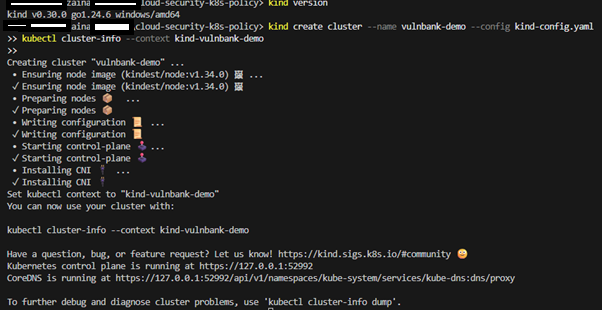

Run the commands below in terminal:

kind create cluster --name vulnbank-demo --config kind-config.yaml

kubectl cluster-info --context kind-vulnbank-demo

This creates a cluster in which port 3000 on the kind node is mapped to localhost:3000 on the host.

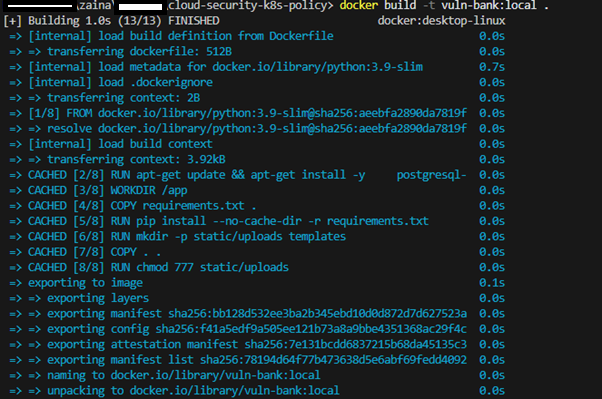

2) Build the VulnBank Image and Load it into kind

By default, kind nodes cannot pull images that exist only in the host's local Docker image store; either push the image to a registry or load it with kind load. From the project root, run the command below:

docker build -t vuln-bank:local .

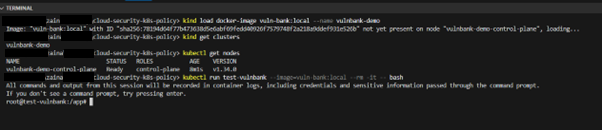

# load the image into kind

kind load docker-image vuln-bank:local --name vulnbank-demo

# check the status of kind clusters

kind get clusters

# check the status of Kubernetes nodes

kubectl get nodes

# run a temporary container for testing and attach an interactive bash shell to it

kubectl run test-vulnbank --image=vuln-bank:local --rm -it -- bash

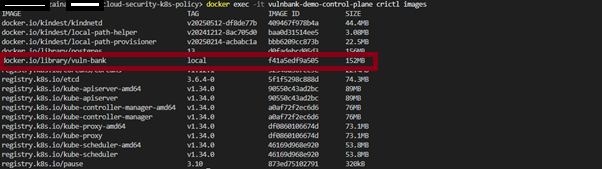

Each kind cluster node is a Docker container. To find the node container, list the running containers:

docker ps

Then list the images inside the vulnbank-demo control-plane node:

docker exec -it vulnbank-demo-control-plane crictl images

Deploying the Insecure VULN-BANK App

Using Kubernetes, we will deploy the application in its default vulnerable configuration, which often means running the Container processes as root.

Create Kubernetes Manifests for VulnBank

Create a directory k8s/ in project and add the following files:

- k8s/deployment.yaml

- k8s/service.yaml

- k8s/db.yaml

The files content can be found here: GitHub Repository

This simple configuration deploys the application with minimal settings. Importantly, it does not explicitly set a non-root user, so the container runs as whatever user the image defines, which here is the insecure root user.
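For orientation, a manifest of this kind might look roughly like the sketch below. It is not the repository's actual file: the names vuln-bank and vuln-bank-svc, the label app=vuln-bank, the image tag and Service port 3000 are taken from the commands in this guide, while the container port and everything else are assumptions. Note the absence of any securityContext or resources block.

```yaml
# Sketch only: an illustrative insecure Deployment and Service, not the repo's files.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: vuln-bank
spec:
  replicas: 1
  selector:
    matchLabels:
      app: vuln-bank
  template:
    metadata:
      labels:
        app: vuln-bank
    spec:
      containers:
        - name: vuln-bank
          image: vuln-bank:local          # image loaded into kind earlier
          imagePullPolicy: IfNotPresent   # never try to pull the local tag from a registry
          ports:
            - containerPort: 3000         # assumption: use the port the app really listens on
          # No securityContext: the container runs as the image's default user.
          # No resources: no CPU or memory requests/limits are set.
---
apiVersion: v1
kind: Service
metadata:
  name: vuln-bank-svc
spec:
  selector:
    app: vuln-bank
  ports:
    - port: 3000
      targetPort: 3000                    # assumption: must match the container port above
```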

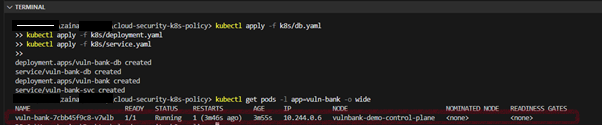

Apply the manifests created:

kubectl apply -f k8s/deployment.yaml

kubectl apply -f k8s/service.yaml

kubectl apply -f k8s/db.yaml

Check and confirm the Pods are running:

kubectl get pods -l app=vuln-bank -o wide

You should see a Pod with the name pattern vulnbank-XXXXX in the Running status. At this point, the VULN-BANK application is running in an insecure configuration (as root).

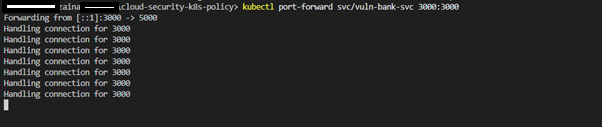

Forward the Service port to local port 3000, the same port used in kind-config.yaml:

kubectl port-forward svc/vuln-bank-svc 3000:3000

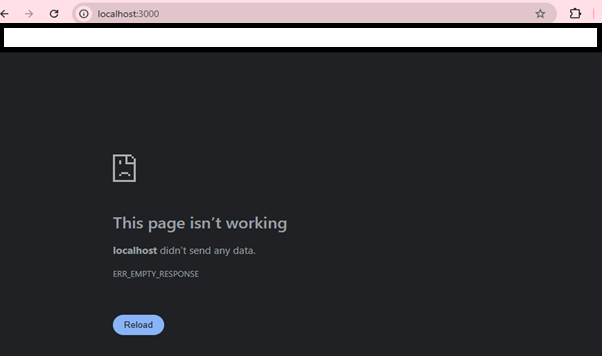

Then open http://localhost:3000 to view the VulnBank app.

Security Policy Enforcement

Now, we will use Kyverno to enforce a rule that prevents the Pod from running as the root user (UID 0), aligning with the Kubernetes security best practice of “Run as Non-Root.”

Use Case: Enforce Non-Root Containers and Add Resource Limits Automatically.

Scenario: As a security professional, we want to ensure all application containers deployed by the development team in the organisation run as non-root users and automatically inject memory and CPU limits.

Kyverno – Installation, Policy Enforcement and Testing

Kyverno is a Kubernetes-native policy engine that can validate, mutate and generate resources, with security rules defined in ordinary Kubernetes YAML.

There are two common ways to install Kyverno: Helm (recommended for production) or raw YAML for a quick demo. For this guide, we will install it via Helm.

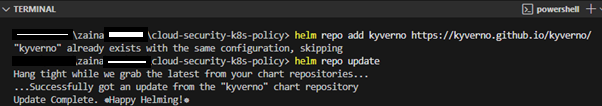

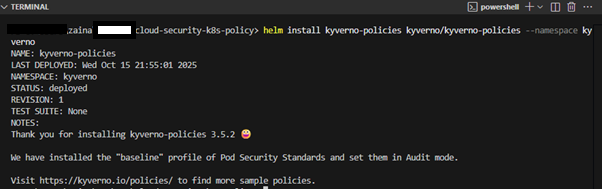

Step 1: Install Kyverno with Helm

helm repo add kyverno https://kyverno.github.io/kyverno/

Next, update the Helm repositories to make sure chart information is up to date:

helm repo update

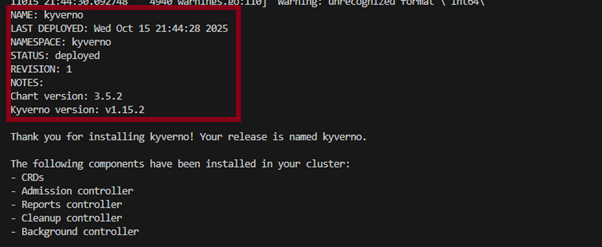

helm install kyverno kyverno/kyverno --namespace kyverno --create-namespace

# optional: install kyverno-policies (prebuilt policy sets)

helm install kyverno-policies kyverno/kyverno-policies --namespace kyverno

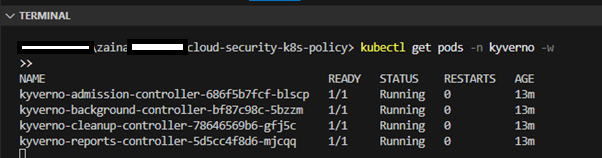

Verify Kyverno Installation

To watch the status of the Pods:

kubectl get pods -n kyverno -w

Step 2: Create Kyverno Policies

1. Policy to Enforce Non-Root Containers

This is a validation policy that denies any resource (such as a Pod or Deployment) that tries to run a container as the root user (runAsUser: 0) or does not explicitly set runAsNonRoot: true. In the project folder, create k8s-policies/kyverno/require-non-root.yaml:

The file content can be found here: GitHub Repository

background: true is set so that the policy also scans and reports on pre-existing resources in the cluster that violate the rule. The scan runs as a background process once the policy has been created.

Note: Kyverno provides many ready-made examples and best-practice policies (require non-root, require limits, etc.). Use validationFailureAction: audit first if you want to observe violations without blocking.
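As a rough illustration of what such a validation policy can look like, here is a minimal sketch. It is not the repository's file: the policy and rule names are hypothetical, it only checks runAsNonRoot (not runAsUser: 0, init containers or ephemeral containers), and the placement and casing of the failure-action field differ between Kyverno versions, so check the documentation for the version you installed.

```yaml
# Sketch only: a simplified non-root validation policy (names are hypothetical).
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-non-root
spec:
  validationFailureAction: Enforce   # use Audit to report violations without blocking
  background: true                   # also report on resources that already exist
  rules:
    - name: check-run-as-non-root
      match:
        any:
          - resources:
              kinds:
                - Pod                # Kyverno normally auto-generates rules for Pod controllers
      validate:
        message: "Containers must run as non-root: set securityContext.runAsNonRoot to true."
        anyPattern:
          # Option 1: set at the Pod level
          - spec:
              securityContext:
                runAsNonRoot: true
          # Option 2: set on every container
          - spec:
              containers:
                - securityContext:
                    runAsNonRoot: true
```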

2. Policy to Inject Default Resource Limits

This is a mutation policy that automatically adds requests and limits if they are missing. In the project folder, create k8s-policies/kyverno/inject-default-resources.yaml:

The file content can be found here: GitHub Repository

Note: patchStrategicMerge is one of Kyverno's supported ways to inject fields. When combined with the +(key) add-if-not-present anchor, it adds missing fields without overwriting limits that are already set.
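A mutation policy along these lines could be sketched as follows. The policy name, rule name and the default values are assumptions chosen for illustration, not the repository's settings. The (name): "*" conditional anchor applies the patch to every container, and the +(...) anchors add a field only when it is absent.

```yaml
# Sketch only: inject default requests/limits when missing (values are illustrative).
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: inject-default-resources
spec:
  rules:
    - name: add-default-requests-and-limits
      match:
        any:
          - resources:
              kinds:
                - Pod
      mutate:
        patchStrategicMerge:
          spec:
            containers:
              - (name): "*"            # match every container in the Pod
                resources:
                  requests:
                    +(cpu): "100m"     # added only if requests.cpu is not set
                    +(memory): "128Mi"
                  limits:
                    +(cpu): "500m"     # added only if limits.cpu is not set
                    +(memory): "256Mi"
```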

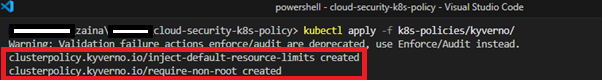

Step 3: Apply the Kyverno Policies to the Cluster

kubectl apply -f k8s-policies/kyverno/

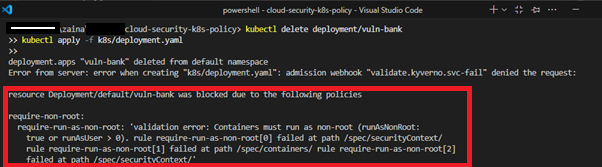

Step 4: Test Kyverno Policy Enforcement with VulnBank Manifest

1. Validation

Now we will redeploy the application by deleting the previous Deployment and applying the manifest again. Because the policy checks runAsNonRoot with validationFailureAction: enforce, the new Deployment will be denied.

kubectl delete deployment/vuln-bank

kubectl apply -f k8s/deployment.yaml

Since the policy is now in place, the Kubernetes API server consults Kyverno's admission webhook before accepting the manifest and returns an error message, confirming that the policy is blocking the insecure configuration. If you try to access the web app URL, it will no longer be reachable.
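One way to bring the Deployment into compliance is to add a securityContext to the Pod template. The fragment below is a sketch, not the repository's fix: UID 1000 is an arbitrary assumption, and the VULN-BANK image may need changes (for example to file ownership) before it runs correctly as an unprivileged user.

```yaml
# Sketch only: fragment to merge into k8s/deployment.yaml under the Pod template.
spec:
  template:
    spec:
      securityContext:
        runAsNonRoot: true   # satisfies the non-root validation policy
        runAsUser: 1000      # assumption: any non-zero UID the image can run as
```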

2. Mutation

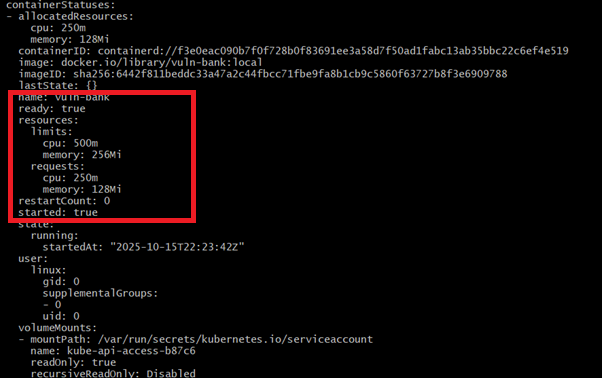

We will check the Pod for resource limits and observe injection. The Container in the Pod now has resources.requests and resources.limits injected, per the mutation policy.

To view the Pod, run the command below:

kubectl get pod -l app=mutate-test -o yaml | sed -n '1,200p'

Inspect the ‘resources’ block inside the Container: Kyverno should have injected the default requests/limits.
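If you need a Pod to test against, something like the sketch below would do. Only the label app=mutate-test comes from the command above; the Pod name, the busybox image and the UID are assumptions. It sets runAsNonRoot so that it passes the validation policy, and deliberately omits resources so that the mutation policy has something to inject.

```yaml
# Sketch only: a throwaway Pod for observing the mutation (names are hypothetical).
apiVersion: v1
kind: Pod
metadata:
  name: mutate-test
  labels:
    app: mutate-test        # matches the label selector used above
spec:
  securityContext:
    runAsNonRoot: true      # passes the non-root validation policy
    runAsUser: 1000         # assumption: arbitrary unprivileged UID
  containers:
    - name: sleeper
      image: busybox:1.36   # assumption: any small image that can run as non-root
      command: ["sleep", "3600"]
      # No resources block on purpose: the mutation policy should add one.
```

After applying this with kubectl apply -f, the command above should show a resources block on the container that was not in the manifest.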

Why These Policies Matter

- Running as root inside a container significantly increases the blast radius of any compromise: if an attacker escapes the container or exploits a vulnerability, they could gain host-level access.

- Enforcing non-root execution ensures applications follow the principle of least privilege, one of the cornerstones of container security and of compliance frameworks like CIS Benchmarks and ISO 27001.

- Developers could forget to define CPU and memory limits, which can lead to resource exhaustion or denial of service in multi-tenant clusters. By automatically injecting safe defaults, we ensure fair resource allocation, stability and predictable scheduling.

Together, these Kyverno policies not only protect the cluster from insecure configurations but also reduce human error by automatically enforcing standards during deployment.

Continue to [Part 2] where we implement OPA Gatekeeper policies and CI/CD integration.